Despite the risk of DDoS attacks and other security threats, many publishers fail to recognize the problem and turn a blind eye to bot traffic, eager to make their traffic numbers look better. In reality, the absence of effective bot management can put the entire project at risk, hurt user experience, or turn advertisers away from the site’s inventory.

In a nutshell, bots are applications that perform automated, repetitive tasks at scale, such as ranking webpages for SEO purposes, aggregating content, or extracting information. Bots are often distributed through a botnet, i.e., connected copies of the bot software running on multiple machines with different IP addresses. From the website owner’s perspective, bots can be good, bad, or irrelevant. Let’s find out what they do on your website and how you can control the situation.

Good bots and how to allow them

Good bots are built to complete missions aligned with publishers’ goals. One of the most common types is spider bots, which search engines and online services use to discover your content, rank pages, and make your website available through search results. Another example is comparison bots, which help users find your products or offers through price comparison services. Finally, data bots aggregate content on a particular topic or provide updates about new posts, weather forecasts, exchange rates, etc.

You can manage good bots using a robots.txt file placed in the root directory of your website. By editing this file, you can allow the spiders you are interested in or specify the exact pages you want them to crawl. Keep in mind that robots.txt is advisory: well-behaved bots follow its rules, but nothing forces a bot to obey them.
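To see how such rules play out, here is a minimal sketch that checks a robots.txt policy with Python's standard `urllib.robotparser` module. The file content, the bot name `ExampleIrrelevantBot`, and the `example.com` URLs are made up for illustration; your own rules will differ.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: the bot names and paths are examples only.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /

User-agent: ExampleIrrelevantBot
Disallow: /

User-agent: *
Disallow: /admin/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text held in memory (no network request is made)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Ask the parser whether a given user agent may fetch a given URL.
for agent, url in [
    ("Googlebot", "https://example.com/articles/1"),
    ("ExampleIrrelevantBot", "https://example.com/articles/1"),
    ("SomeOtherBot", "https://example.com/admin/settings"),
]:
    print(agent, url, parser.can_fetch(agent, url))
```

Testing the rules this way before publishing the file can help catch a rule that accidentally blocks a spider you want to keep.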

To avoid biased audience measurement, you can exclude all hits from known bots in Google Analytics. You can also define a list of IP addresses to filter out, so that spiders and other good bots with known sources do not appear in your analytics reports.
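If you process raw hit data yourself, the same idea of ignoring a list of known bot IPs can be sketched in a few lines of Python. This is only an illustration: the network ranges below are reserved documentation addresses, and the `hits` structure is a hypothetical format, not an export from any particular analytics tool.

```python
import ipaddress

# Hypothetical ranges for illustration (reserved documentation addresses).
# In practice, use the ranges published by the bot operators you trust.
KNOWN_BOT_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_known_bot(ip: str) -> bool:
    """Return True if the address falls inside any known bot network."""
    addr = ipaddress.ip_address(ip)
    return any(addr in network for network in KNOWN_BOT_NETWORKS)


def exclude_bot_hits(hits: list[dict]) -> list[dict]:
    """Keep only the hits whose source IP is not on the known bot list."""
    return [hit for hit in hits if not is_known_bot(hit["ip"])]


hits = [
    {"ip": "192.0.2.10", "path": "/pricing"},    # inside a listed range
    {"ip": "198.51.100.7", "path": "/pricing"},  # not listed, kept
]
human_hits = exclude_bot_hits(hits)
print(len(human_hits))
```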

Some good bots may also be irrelevant to your business goals. For example, certain bots may crawl your website searching for content categories unrelated to your project. You can keep these irrelevant bots out by blocking their IP addresses or by leaving them off your bot allowlist.

Prevent malicious bot traffic and bot assaults

Bad bots aim to cause damage to your website and come in a variety of types. Perhaps the most harmful are those masked as human visitors, which bypass website security systems to bring the site down. Imposter bots are disguised as good bots and scrape web content to post it on other sites, harvest users’ credentials or email addresses, violate a website’s terms of service, or do other malicious deeds. According to a study from last year, more than half of bad bots claim to be Google Chrome. Fraud bots, on the other hand, operate under the radar and try to steal information, such as personal data or credit card details, or even hijack data servers.

Common examples of malicious bot activity are DoS and DDoS attacks. A denial-of-service (DoS) attack uses a single machine that exploits a website vulnerability or sends a large number of packets or requests. A distributed denial-of-service (DDoS) attack, however, involves multiple connected machines, or botnets, for the same purpose. As a result of these bot assaults, the target resource is exhausted and can no longer serve its intended users. Very often, DDoS attacks are executed to make business owners pay a ransom to keep their infrastructure online; these are known as ransom DDoS attacks.

In some cases, a high bounce rate combined with extraordinarily high pageviews can indicate the presence of bad bots, as human behavior usually produces a lower bounce rate. More generally, to detect malicious bot traffic, you have to look at all the network requests the website receives and analyze log files to identify suspicious requests, unlikely behavior, or anomalies. For example, you may spot a spike in traffic from an unusual region, a lower-than-usual session duration, etc.
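As a rough illustration of log analysis, the sketch below counts requests per IP address in an access log and flags addresses that exceed a threshold. It assumes the log uses the Common Log Format; the sample lines and the threshold value are invented for the example, and a real setup would look at many more signals than request volume.

```python
import re
from collections import Counter

# Matches the start of a Common Log Format line:
# ip ident user [timestamp] "request" status size
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+'
)


def requests_per_ip(lines):
    """Count how many parsable log lines each IP address produced."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match:  # silently skip lines that do not fit the format
            counts[match.group("ip")] += 1
    return counts


def suspicious_ips(lines, threshold):
    """Return the IPs with more than `threshold` requests, sorted."""
    counts = requests_per_ip(lines)
    return sorted(ip for ip, n in counts.items() if n > threshold)


# Invented sample data: one address sends five requests, another sends one.
sample_log = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /page HTTP/1.1" 200 512'
] * 5 + [
    '198.51.100.7 - - [10/Oct/2023:13:55:40 +0000] "GET / HTTP/1.1" 200 1024'
]
print(suspicious_ips(sample_log, threshold=3))
```

A flagged address is only a starting point for investigation, since a busy office network or a legitimate crawler can also produce many requests.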

Of course, detection alone does not prevent attacks. Once a website’s vulnerabilities and the footprint of malicious bots are spotted, you have to employ other tools and mechanisms to block them. Depending on the business logic of your site, you can protect exposed APIs, add CAPTCHAs at some points, limit network traffic by IP or location, put a cap on how often a visitor can send requests, etc.
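Putting a cap on how often a visitor can send requests is usually called rate limiting. The sketch below shows one possible approach, a sliding-window limiter kept in memory; the class name, the limits, and the use of the client IP as an identifier are assumptions for illustration. In production this job is typically handled by a web server, CDN, or bot management service.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._clock = clock  # injectable so the limiter can be tested
        self._hits = defaultdict(deque)  # client id -> request timestamps

    def allow(self, client_id):
        """Record the request and return True, or return False if over the cap."""
        now = self._clock()
        timestamps = self._hits[client_id]
        # Drop timestamps that have fallen out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False
        timestamps.append(now)
        return True


# Example: at most 2 requests per 10 seconds for each client IP.
limiter = SlidingWindowRateLimiter(max_requests=2, window_seconds=10)
for attempt in range(3):
    print(attempt, limiter.allow("203.0.113.5"))
```

Rejected requests are not recorded, so a client that keeps hammering the site is let back in as soon as its earlier requests age out of the window.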

For large web projects, it is necessary to implement a bot management system that allows granular differentiation of bot traffic. Popular bot management solutions include Cloudflare, ClickGUARD, Radware, and Akamai, to name a few.

Impact on advertising

Bots may cause a significant drain on marketing budgets and skew campaign data. Some cloud-based botnets also generate false ad impressions or clicks, a practice known as click fraud. To maintain trust in the advertising ecosystem, publishers have to provide accurate data about their audience and block click fraud activities on their sites. If you monetize a website through ads and there is some sort of click fraud or significantly inflated traffic data, major ad providers can ban the site from their networks.

At MGID, we are proud to be recognized for our ability to detect bot traffic and ad fraud. In 2020, MGID received the TAG Certified Against Fraud Seal for its proprietary fraud protection solution. We also use the Anura fraud detection tool to ensure traffic quality. In addition, we have an in-house team of analysts who manually check traffic on a regular basis.

Final thought

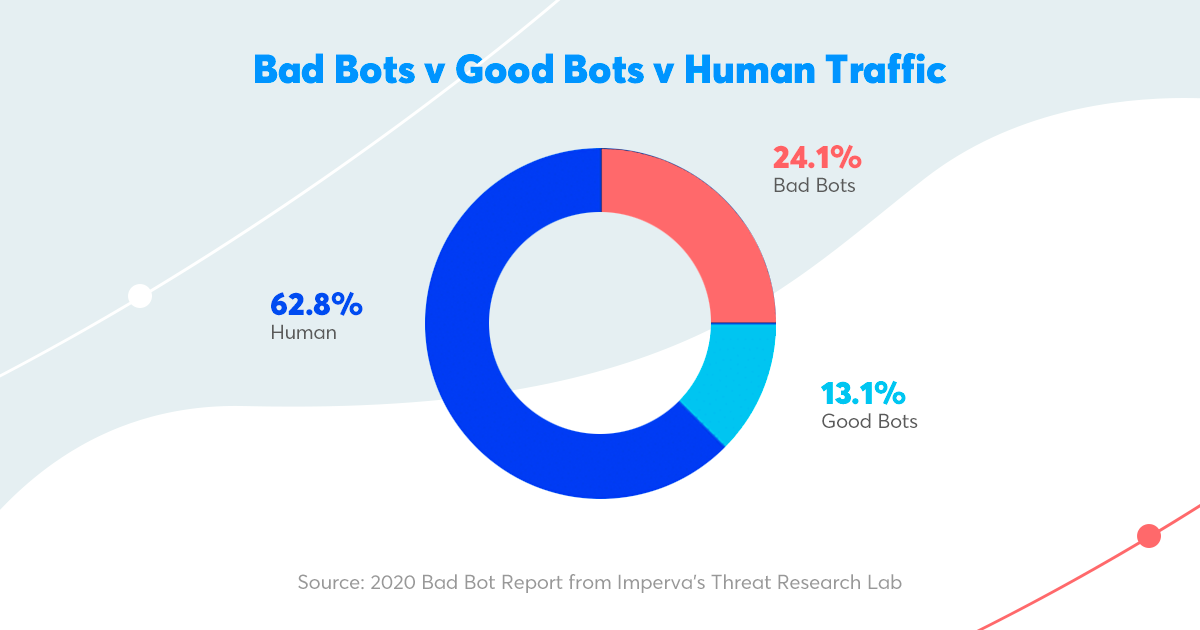

Today, about 40% of Internet traffic is bot traffic, and malicious bots are probably one of the biggest headaches for many publishers. To protect your site from unwanted bot traffic, we recommend using one of the modern bot management solutions, such as Cloudflare, ClickGUARD, Radware, or Akamai. You can also control good bots, such as spiders or crawlers, by changing the settings in the robots.txt file.